So I have finished putting in environment mapping. I might tweak the shader a bit more, as right now I am using 100% reflectivity whereas elemental mercury should be around 70%. However, I think the texture already goes a long way toward selling the mercury effect.

Here's a quick vid of the classic GLUT teapot rendered with environment cube mapping:

I have also gotten the improved algorithm to a more stable point. Unfortunately, it's still not real time for 100 particles, but I was at least able to visualize it at a sluggish 0.1 fps for 100 particles and in real time for 10 particles. Optimizing my spatial hashing code should further speed up the algorithm. Also, I am going to try a quick change to the data structure storing the grid points to sample, which should also result in a speedup: using a list instead of a set, creating it in linear time, sorting it in O(n log n), and then iterating through it and copying into a new list to get rid of duplicates.
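A minimal C++ sketch of that list-then-sort idea is below. The helper name `uniqueCellIds` and the use of `long long` ids are assumptions for illustration, not the project's actual code; it also uses `std::unique` to compact the list in place rather than copying into a second list, which has the same linear cost.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical helper (not from the project code): reduce a list of integer
// grid-cell ids to its unique values. Building the input list is linear,
// since each id is just appended.
std::vector<long long> uniqueCellIds(std::vector<long long> ids) {
    std::sort(ids.begin(), ids.end());                          // O(n log n)
    ids.erase(std::unique(ids.begin(), ids.end()), ids.end());  // O(n) pass over adjacent duplicates
    return ids;
}
```

Compared with inserting into a `std::set`, this keeps the ids in one contiguous array, which tends to be friendlier to the cache and the allocator, though whether it is actually faster here would need measuring.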

Here are two videos demonstrating the real-time 10-particle mercury and the 100-particle mercury sped up to real time.

Anyway, as you can see from the video, the more refined implicit surface reconstruction combined with the environment mapping goes a long way toward selling the material as mercury. Also, the volume introduced by the surface helps add to the surface tension-like blobbiness of the mercury by giving additional depth to the material.

Some sources that were a huge help in understanding environment mapping and actually getting glsl shaders to work:

http://elf-stone.com/glee.php

-GLee for getting openGL extensions to work

http://www.lonesock.net/soil.html

-SOIL for loading in cube maps

http://tfc.duke.free.fr/

-a great environment mapping demo which was a huge help as a base for my code

http://www.lighthouse3d.com/tutorials/glsl-tutorial/?intro

- a good intro to understanding glsl

http://nehe.gamedev.net/data/articles/article.asp?article=21

- another thorough article on understanding glsl in general

http://www.ozone3d.net/tutorials/glsl_texturing_p04.php

- a good environment cube mapping tutorial for glsl

http://3dshaders.com/home/index.php?option=com_weblinks&catid=14&Itemid=34

- lots of glsl resources and shader code useful as a reference

http://www.cse.ohio-state.edu/~hwshen/781/Site/Lab4.html

- another helpful tutorial on setting up environment cube mapping in openGL/glsl

http://www.codemonsters.de/home/content.php?show=cubemaps

- awesome resource for images to use in creating cube maps; currently I am using one of the ones from NVIDIA.

This will likely be my last post before the presentation and poster session on Thursday. With Passover just ending, things have been really crazy, and I'm still working on finishing up the poster and video and making some final tweaks before the presentation. As always, any comments or feedback are much appreciated!

Wednesday, April 27, 2011

Thursday, April 21, 2011

Texturing and Environment Mapping

As progress has been somewhat limited due to Passover and my internet connection currently being rather slow, and since I will undoubtedly be posting again later this week, this will be one of the shorter blog posts, at least until I can update with pretty pictures of my progress. I have made a lot of headway on implementing my plan for massively culling the search space for the marching cubes algorithm, but am still bottlenecking on the spatial hashing code and on using std::set and the corresponding memory management effectively. I am currently looking again at the grid implementation in this open source SPH implementation for ideas on optimizing the spatial hashing.

Anyway, for now I have shelved that part and started on the texturing and environment mapping. Unlike the above, the implementation for this is relatively straightforward; I think texturing is somewhat working now, but I still need to tweak the OpenGL lighting/materials to get more shininess on the liquid. I've been familiarizing myself with GLSL for the cube mapping and it's also proving pretty manageable to use, so I'm hoping to get that completed in the very near future. That's all for now; hopefully more to come very soon.

Thursday, April 14, 2011

Efficiency Idea/Algorithm

Since my current efficiency bottleneck right now is in the cube marching step, I've been thinking about a way to get my algorithm to only sample grid cells likely to be at the border of the implicit surface. So after some serious brainstorming, I thought of a simple idea that should make the cube marching algorithm run significantly faster for me.

The idea basically exploits the fact that the implicit blobby surface should look topologically very similar to the list of spheres that currently approximates the surface in my fluid dynamics algorithm. Specifically, only very local spheres should have an impact on the implicit surface at any point. Basically I would like the implicit surface to look like the spheres except with the blobby metaball effect between very nearby ones. With that in mind, I came up with this algorithm that should allow for a much finer subdivision of my grid without sampling every point. Rather than a cube marching algorithm, it now ends up being more of a cube finding method in that it only samples voxel cubes that are nearby the implicit surface.

for each particle:

1. Get a loose boundary B of the sphere (e.g. a bounding box extended so that its width is 2*(the desired radius))

2. Divide B into x segments along each dimension and "project" (e.g. round down) each segment to the nearest grid cell**

- Each cell can be defined by a unique integer id based on its position

3. Add each of these cells to a set of integers s, which prevents duplicates

---------------------------------------

in cube-marching: for each cell in s

1. sample that cell as in cube-marching

**Get the nearest grid cell as follows: e.g. in the 1-D case, if the grid cell size is .01, a segment at .016 would round down to .01. Likewise, .026 would round down to .02. Note also that the grid cell size should be set equal to bounding_width/x.
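The cell-finding steps above can be sketched in C++ roughly as follows. This is only an illustration under stated assumptions: the `Sphere` struct, the `cellId` bit-packing scheme, and the function name `candidateCells` are all hypothetical, and the grid cell size is taken to be bounding_width/x as in the note above.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <set>
#include <vector>

struct Sphere { double x, y, z; };  // particle centre (assumed layout)

// Hypothetical id scheme: pack three cell indices into one 64-bit integer.
// Assumes every index lies in [-2^20, 2^20).
std::int64_t cellId(int i, int j, int k) {
    const std::int64_t offset = std::int64_t(1) << 20;
    return ((i + offset) << 42) | ((j + offset) << 21) | (k + offset);
}

// Collect the ids of the grid cells touched by each sphere's loose bounding
// box (width 2 * radius), using x segments per dimension.
std::set<std::int64_t> candidateCells(const std::vector<Sphere>& spheres,
                                      double radius, int x) {
    assert(radius > 0.0 && x > 0);
    const double cell = 2.0 * radius / x;  // grid cell size = bounding_width / x
    std::set<std::int64_t> cells;
    for (const Sphere& s : spheres) {
        // Round the lower corner of the box down to a cell index.
        const int i0 = static_cast<int>(std::floor((s.x - radius) / cell));
        const int j0 = static_cast<int>(std::floor((s.y - radius) / cell));
        const int k0 = static_cast<int>(std::floor((s.z - radius) / cell));
        for (int a = 0; a < x; ++a)
            for (int b = 0; b < x; ++b)
                for (int c = 0; c < x; ++c)
                    cells.insert(cellId(i0 + a, j0 + b, k0 + c));  // the set drops duplicates
    }
    return cells;
}
```

One caveat with rounding down: a box that is not aligned with the grid can straddle x+1 cells per axis, so a more conservative variant would loop up to and including x.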

I can also reduce the time it takes to sample a point by using spatial hashing on the heuristic that far away spheres should not have an influence on the surface. To do this, I simply need to modify my spatial hashing grid such that it can take an arbitrary position and return all nearby spheres. Sampling can then be re-written as:

sample(point a) {

get list l of all spheres near a from the spatial hashing grid

compute the implicit surface at this point using only the spheres in l

}
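As a rough C++ version of the pseudocode above: the spatial hash lookup is represented here by a callback type, `NeighborQuery`, which is an assumption standing in for whatever interface the grid actually exposes. The falloff used is the exponential one from the March 3 post, and the `Sphere` fields are likewise hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <functional>
#include <vector>

struct Vec3 { double x, y, z; };
struct Sphere { Vec3 c; double s; };  // centre and falloff width (assumed fields)

// Stand-in for the spatial hash lookup: returns the spheres near a point.
using NeighborQuery = std::function<std::vector<Sphere>(const Vec3&)>;

// Evaluate the implicit function at a, summing only over the spheres that
// the grid reports as nearby instead of over every particle.
double sample(const Vec3& a, const NeighborQuery& nearby, double bias) {
    double sum = 0.0;
    for (const Sphere& sp : nearby(a)) {
        const double dx = a.x - sp.c.x;
        const double dy = a.y - sp.c.y;
        const double dz = a.z - sp.c.z;
        sum += std::exp(-std::sqrt(dx * dx + dy * dy + dz * dz) / sp.s);
    }
    return bias - sum;
}
```

Because the exponential never reaches exactly zero, dropping far spheres changes the value slightly; the heuristic relies on that contribution being negligible at the chosen search radius.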

Analysis:

Since we are looping through each of the n particles, and for each particle dividing into x segments along each dimension, we get a total of n*x^3 cells to try to add to the set. In the worst case, adding to the set takes O(lg(n*x^3)) time, so for the first setup part of the algorithm we have an upper bound of O(n*x^3 * lg(n*x^3)) = O(n*x^3 * (3 lg x + lg n)).

For the sampling part, assume spatial hashing reduces the evaluation of the surface at one point to O(n_h), where n_h is the number of nearby spheres. Since we are sampling up to 8n*x^3 grid points (8 corners per cell), the running time is upper-bounded by O(8n*n_h*x^3). Since x defines the number of subdivisions per sphere and will be some constant, such as 10, and n_h should dominate the lg n term, the entire algorithm is thus upper-bounded by O(n*n_h), the same as the dynamics part of the algorithm. In practice, the constant factor, on the order of 1000-10000, will significantly slow down the running time. This can be somewhat reduced by lowering the value of x, and the overall running time should still be significantly faster, since the algorithm would no longer be evaluating the surface at each of the X^3 grid cells, where X is the number of subdivisions per dimension in the entire grid space.

______

So for now, I am working on putting this idea into practice and seeing if it will significantly speed up my algorithm to allow for much finer grid resolution. I am also working on texturing so that I can put environment mapping in. On Joe's recommendation I am using GLSL to do the environment mapping, which looks like it should be a really nice, simple implementation. In the meantime, any thoughts/comments on the feasibility of the above algorithm would be much appreciated.

Wednesday, April 6, 2011

Slow Progress and Smooth Shading

With the beta review coming up, I finally have some results to show on the implicit surface reconstruction side. The problem is, it runs nowhere close to real time except at extremely low grid resolution/poly count. However, you can sort of start to get the idea. Also, with surface normals computed, I have smooth shading working as well. I am currently using an adapted version of the open source marching cubes code by Cory Gene Bloyd from this site. In terms of future approach, I am now thinking that using hierarchical bounding volumes and an adaptively sized grid might make this algorithm fast enough, at least for low spatial separation.

Anyhow, here's what I currently have:

Thursday, March 31, 2011

Short update: Interaction and Implicit Progress

I'm planning on doing a bigger update a little before the beta review, so this will probably be one of my shorter posts. With quite a few projects going on this week, I was not able to get everything done for the beta review yet. However, over the next few days I have a mysterious lull in work from other classes, so I should be posting more progress in a couple of days. As planned, I have finished up basic user interaction. The main difference between the final implementation and the pseudocode from last week is that I had to use an impulse rather than a straight-up force to modify fluid behavior, because of the way force is currently zeroed at the beginning of each time step (I might change this later). I finally put in an fps tracker as well, which displays on the GUI status bar at the bottom. Right now the simulation is ranging around 2 fps for the 1000-particle set, and around 20-some fps for the 100-particle set on my laptop.
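To illustrate why an impulse survives where a stored force does not, here is a small C++ sketch. The `Particle` layout and the `step` function are simplified assumptions rather than the simulation's real code; the only behavior taken from the post is that the force accumulator is zeroed at the start of each time step.

```cpp
#include <cassert>

struct Vec3 { double x, y, z; };

struct Particle {
    Vec3 velocity{0.0, 0.0, 0.0};
    Vec3 force{0.0, 0.0, 0.0};  // per-step force accumulator
    double mass = 1.0;
};

// An impulse J changes the velocity immediately (v += J / m), so it is not
// affected when the force accumulator is cleared.
void applyImpulse(Particle& p, const Vec3& J) {
    p.velocity.x += J.x / p.mass;
    p.velocity.y += J.y / p.mass;
    p.velocity.z += J.z / p.mass;
}

// One simplified time step that zeroes forces first.
void step(Particle& p, double dt) {
    p.force = Vec3{0.0, 0.0, 0.0};  // a force stored before this point is lost
    // ... pressure, viscosity and gravity would be accumulated into p.force here ...
    p.velocity.x += dt * p.force.x / p.mass;
    p.velocity.y += dt * p.force.y / p.mass;
    p.velocity.z += dt * p.force.z / p.mass;
}
```

An alternative would be a separate external-force field that is applied after the zeroing, which is presumably what changing this later would involve.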

I'm still working on the implicit reconstruction, mainly going back and doing a bunch more background reading on feasible solutions, such as:

http://research.microsoft.com/en-us/um/people/hoppe/psrecon.pdf

http://web.mit.edu/manoli/crust/www/crust.html

http://physbam.stanford.edu/~fedkiw/papers/stanford2001-03.pdf

In particular, this paper discusses a method that might be useful, since it is designed to be faster and lighter on memory. Essentially, it uses a level set method, interpolating the zero isosurface of the level set function. A simplified version of the motion of the surface is also embedded in addition to the surface, which might be a good way to get fast deformations without having to recompute the entire mesh from scratch.

UPDATE:

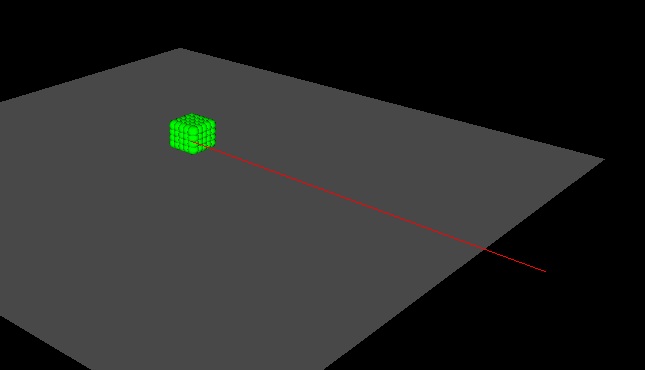

Here is a video of user interaction working. The video also shows how clicking shoots a ray from the camera. The framerate is now displayed in the lower left corner of the status bar.

For next week and the beta review, same plan as before: focus on the implicit formulation.

Thursday, March 24, 2011

Self-Evaluation/Reality Check/Future Game Plan

With just over a week until the beta review, here is an evaluation of my progress and what is left to do.

Looking back at the list of final deliverables, I feel like I still have quite a bit left so I'm trying not to feel too much pressure (deep breaths) but I know I still have quite a bit of work ahead of me.

On the other hand, looking back I feel like I've already accomplished a lot, including all the preliminary research, GUI and camera setup, particle framework, spatial hash table, fluid dynamics, and adding in parameter control. It would have been nice to have gotten farther along in the surface reconstruction at this point, but other than that I think I'm somewhat on par with my suggested timeline.

I really want to have time to get to the haptics, because I think that will make the end product a lot more dynamic and interesting to play with but I am aware that with the time constraints it is more important to get what I have working fully and play the haptics part by ear. With this in mind, I have devised a list of the necessary parts of the project that need to get finished and the other things that I want to do but are not as important and are more sort-of "icing-on-the-cake" deliverables.

Most important things to get working:

- Surface reconstruction (in progress)

- User Interaction (in progress, almost done)

- Environment mapping and smooth shading

- Optimized running time

- Haptic control and force feedback (arguably should go at bottom of other list)

- Improved User Interaction (e.g. rigid bodies)

- Tweaking particle dynamics / parameters

- Small GUI things (e.g. saving/loading materials, etc.)

- Index of refraction

- Some form of implicit surface reconstruction

- Click-drag user interaction

Implicit Problems and Other Oddities

Well, what with lots of debugging and little sleep, this week was not nearly as productive as I had hoped but on the bright side at least I am dealing with these issues now as opposed to in a couple weeks. The majority of this week was spent with the implicit version of the particle sim and trying to get it to work in some form as well as doing some more reading and re-reading on options for (fast) surface reconstruction.

I think the main problem I am having right now is that I don't have a way of visually debugging, so I'm not really sure how close I am to having the ray-marching work. I'm just getting a lot of ill-formed shapes, so I've gone back and am now redoing the surface reconstruction in smaller, easy-to-check parts. I'm also wary that this method is bound to be pretty slow, so I'm still looking into other ways of reconstructing the implicit surface once I'm sure it's set up correctly. The original Witkin-Heckbert particle constraint idea is looking less appealing given that it is quite a lot of code and still only generates points, which must then be polygonized in real time as well. Suffice it to say, I'm struggling a little with this part of the project right now.

On a side note, I have also been working on smaller things such as the user interaction forces now that I have a real camera setup (see last couple posts) and actually have access to such info as the view plane. Not quite finished with this either, but should be soon. My current plan/pseudo-code for the basic interaction forces:

1. Qt signals user click

2. Store the location of the click at c.last

3. interactionClick <- TRUE

4. Shoot Ray out from camera eye position through the clicked position in world space

5. //This step takes O(n) time:

For each particle at position p do a ray-sphere intersection with the sphere centered at p with radius r.

d <- SphereIntersect(p,r);

//returns -1 if no hit, otherwise the closest distance d from p to Ray

//Note that d <= r

if (d != -1) { //there was an intersection

tag this particle p and store its d

}

...................

//In next time steps - if interactionClick == TRUE

6. Qt signals user drag with mouse clicked at c.current

7. dDrag <- c.current - c.last //dDrag should be projected so that it is parallel to the view plane

8. For each particle p that is tagged

Compute a force f with magnitude a function of p.d and lengthOf(dDrag)

f points in direction of dDrag

Store f with that particle to be applied in external forces step of simulation

9. c.last <- c.current

....................

10. OnMouseRelease()

Remove tags from all particles

interactionClick <- FALSE
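Steps 5 and 8 of the plan above could look something like this in C++. It is only a sketch: the ray is passed in explicitly (origin plus unit direction), a sphere whose centre lies behind the eye is treated as a miss, and the linear falloff in `dragForce` along with its `strength` parameter is an assumed choice, since the plan only says the magnitude is some function of p.d and the drag length.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

Vec3 operator-(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Returns the closest distance from the sphere centre p to the ray (origin o,
// unit direction dir), or -1 if the ray misses the sphere of radius r.
// A hit therefore always satisfies d <= r.
double sphereIntersect(const Vec3& o, const Vec3& dir, const Vec3& p, double r) {
    const Vec3 op = p - o;
    const double t = dot(op, dir);          // ray parameter of closest approach
    if (t < 0.0) return -1.0;               // centre is behind the eye: treat as a miss
    const double d2 = dot(op, op) - t * t;  // squared distance from p to the ray
    if (d2 > r * r) return -1.0;
    return std::sqrt(std::max(d2, 0.0));
}

// Assumed force model: points along dDrag, scales with the drag length, and
// fades linearly from full strength at d = 0 to zero at d = r.
Vec3 dragForce(const Vec3& dDrag, double d, double r, double strength) {
    const double w = strength * (1.0 - d / r);
    return {w * dDrag.x, w * dDrag.y, w * dDrag.z};
}
```

Looping `sphereIntersect` over all particles is the O(n) step; tagging a particle then amounts to storing the returned d whenever it is not -1.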

Anyway, that's it for now. Hopefully some more videos to come soon, but I'll save the "future plans" part of this post for the self-critique which I should be putting up shortly.

Thursday, March 17, 2011

Refinements and Implicit Attempts

So with the craziness of this week, trying to juggle a bunch of different projects and then going to California to look for housing for next year, it has taken me a while to get up the final version of this blog post, but things seem to be moving along. I have finished adding in most of the refinements I mentioned last post. Specifically, I finished redoing the camera class, so that it now actually stores all the relevant information (view reference point, eye position, view up vector, view plane distance, etc.) and uses gluLookAt to do the camera aiming. Then, as a first step toward getting the interactivity working and to show off the newfound power of the camera class, I set it up so that when you Shift+click on a point, it draws a ray from the camera eye through that point (see screenshot below):

I used the camera class from some of the CIS462 homeworks as an extensive reference for setting up the camera. Once I got the transformations working it was relatively straightforward to then modify the main class to then interface with these methods instead of directly modifying the values of the Camera struct. For shooting the ray, looking back at the CIS277 course notes was extremely helpful in remembering how to convert the 2D screen coordinates to the 3D world coordinates using the camera up vector, eye position, and center.
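For reference, one common way to turn a 2D click into a world-space ray from the eye position, center, and up vector is sketched below in C++. This is not the CIS277 or CIS462 code; the function name `rayDirection`, the field-of-view parameter, and the top-left pixel origin (as Qt mouse events report it) are assumptions, chosen to match a gluLookAt/gluPerspective-style camera.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

Vec3 operator+(const Vec3& a, const Vec3& b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(double s, const Vec3& v) { return {s * v.x, s * v.y, s * v.z}; }
Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(const Vec3& v) {
    const double len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    return {v.x / len, v.y / len, v.z / len};
}

// Unit direction of the ray from the eye through pixel (sx, sy).
// (sx, sy) has its origin at the top-left of a width x height viewport;
// fovyRadians is the vertical field of view.
Vec3 rayDirection(const Vec3& eye, const Vec3& center, const Vec3& up,
                  double fovyRadians, double width, double height,
                  double sx, double sy) {
    const Vec3 forward = normalize(center - eye);
    const Vec3 right = normalize(cross(forward, up));
    const Vec3 trueUp = cross(right, forward);
    const double tanHalf = std::tan(0.5 * fovyRadians);
    const double aspect = width / height;
    const double ndcX = 2.0 * sx / width - 1.0;   // -1 at left edge, +1 at right edge
    const double ndcY = 1.0 - 2.0 * sy / height;  // +1 at top edge, -1 at bottom edge
    return normalize(forward + (ndcX * aspect * tanHalf) * right + (ndcY * tanHalf) * trueUp);
}
```

The ray itself is then eye + t * direction for t >= 0, which is all that is needed to draw it or to intersect it with particles.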

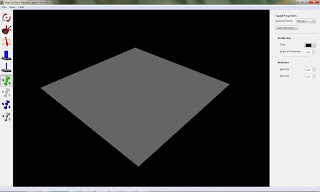

Additionally, I also modified the GUI as well as the underlying framework to be able to actually change all the parameters (everything but index of refraction) including color from within the GUI during the simulation. Note in the screenshot to the left all the parameters from last post are now directly manipulable from within the simulation.

It is turning out to be less trivial than expected to code in the implicit formulation framework and then get that to interface with the rest of the program, especially figuring out indexing and how to define the isolevel within each grid cell. I am continuing to work on building the implicit formulation into the code and experimenting with the Paul Bourke surface reconstruction code.

So the plan right now is to continue working on this, hopefully getting some sort of visualization in the near future. Smaller things can also be tweaked, but for now I think the implicit surface reconstruction is the main thing. Also, now that the ray shooting is working, I'm hoping to finally get some simple click-drag interaction forces in. More to come soon.

Thursday, March 3, 2011

Interactions, tweaks and start of implicitness

So as this week has involved scrambling to complete movies, midterms, and smoke simulations, along with a general lack of what we might consider necessary doses of sleep, overall it was not an extremely productive week on the senior project front. Also, as I am currently finishing this blog post at a resort, I am paying dearly for internet/computer access right now, so I will keep this pretty brief.

I am still working on adding user interaction to my GUI, which is proving more difficult than anticipated due to my simple camera implementation (which is just using the glTranslate and glRotate methods right now). I am thinking of upgrading my camera class so that I can use gluLookAt instead of relying on rotating the world; this will make shooting rays and picking objects much easier since I will have the eye position already stored. For now, I am thinking of just the click-drag -> apply force in that direction schema, which should integrate nicely with my framework. Later, if there's time, I can use the rigid bodies class I added into the framework to have a "virtual tool" for interacting with the mercury (see the interaction tool buttons on my GUI), but I'm thinking I might just make this an option for haptics anyway.

In terms of speeding up the simulation, I think choosing a better hash function for my spatial hashing data structure should help. Other smaller improvements to my program I'm working on:

- Adding in more parameters to the GUI and actually having slots to make the simulation respond to changing the parameters. The ones I'm adding (right now these are all just predefined constants):

- k - the pressure constant for computing pressure

- k_near - the near pressure constant for near pressure (increasing this causes less clustering by repelling very close particles)

- k_spring - the spring constant for springs between particles

- gamma - elasticity constant

- alpha - plasticity constant

- sigma - linear viscosity term

- mu - friction term between particles and rigid bodies

- rho_0 - the rest or desired density of the particles

- these all mostly come from the Clavet paper

- while some of these parameters will probably not be in the final version of the application, I think it will be very useful to be able to experiment with tweaking different parameters in real time, and it will make the program more dynamic. Allowing the user to change the particle color should also be pretty trivial.

- Adding a framerate tracker to get a read on the exact framerate - will be helpful in quantifying performance and trying to get a speedup (I think for simplicity I might just adapt the one from the CIS563 base code - the mmc::FpsTracker class)
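To make the roles of k, k_near, and rho_0 from the list above a little more concrete, here is a C++ sketch of the density and pressure computation as it is usually described for the double density relaxation step of the Clavet et al. paper. The function name, the `Pressures` struct, and the interface (a list of neighbor distances) are assumptions for illustration, and the formulas are reproduced from memory of the paper rather than from this project's code.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Pressures { double p; double pNear; };

// distances: distances r_ij from one particle to each of its neighbors.
// h: interaction radius. k, kNear, rho0: the GUI parameters listed above.
Pressures doubleDensityPressure(const std::vector<double>& distances, double h,
                                double k, double kNear, double rho0) {
    double rho = 0.0;      // density:      sum of (1 - r/h)^2
    double rhoNear = 0.0;  // near density: sum of (1 - r/h)^3
    for (double r : distances) {
        const double q = r / h;
        if (q < 1.0) {  // only neighbors inside the interaction radius contribute
            const double w = 1.0 - q;
            rho += w * w;
            rhoNear += w * w * w;
        }
    }
    // Pressure pulls the density toward rho0; near pressure only ever repels.
    return {k * (rho - rho0), kNear * rhoNear};
}
```

With this form, raising kNear strengthens the short-range repulsion, which fits the note above about k_near reducing clustering.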

f(x) = bias - sum_i exp(-|x - c_i| / s_i) //sorry that's illegible, I'm using a Spanish keyboard

where c_i is the location of particle i and s_i is a standard deviation for that particle. I am going to start by trying to construct the surface using the open source marching cubes algorithm and see what sort of visualization and framerate I get. Then I will look at the other possible methods for surface reconstruction (the Witkin floater particle constraint method, etc.). I've been scrounging the web for other possible approaches that might work. Anyhow, that's all for now. When I return from my vacation I will hopefully be much more well rested and ready to start on some implicit surface reconstruction!
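A direct C++ transcription of this implicit function might look like the sketch below, which also includes the analytic gradient, since a normalized gradient is one way to obtain surface normals for shading. The `Particle` struct and both function names are hypothetical, and the sum runs over every particle with no spatial acceleration.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 { double x, y, z; };
struct Particle { Vec3 c; double s; };  // centre c and falloff width s (assumed layout)

// f(x) = bias - sum_i exp(-|x - c_i| / s_i). With this sign convention f is
// negative where particle influence exceeds the bias, and the surface is f = 0.
double field(const Vec3& x, const std::vector<Particle>& ps, double bias) {
    double sum = 0.0;
    for (const Particle& p : ps) {
        const double dx = x.x - p.c.x, dy = x.y - p.c.y, dz = x.z - p.c.z;
        sum += std::exp(-std::sqrt(dx * dx + dy * dy + dz * dz) / p.s);
    }
    return bias - sum;
}

// Gradient of f: each term contributes exp(-r/s) / (s * r) * (x - c), which
// points away from that particle's centre.
Vec3 fieldGradient(const Vec3& x, const std::vector<Particle>& ps) {
    Vec3 g{0.0, 0.0, 0.0};
    for (const Particle& p : ps) {
        const double dx = x.x - p.c.x, dy = x.y - p.c.y, dz = x.z - p.c.z;
        const double r = std::sqrt(dx * dx + dy * dy + dz * dz);
        if (r < 1e-12) continue;  // gradient is undefined exactly at a centre
        const double w = std::exp(-r / p.s) / (p.s * r);
        g.x += w * dx;
        g.y += w * dy;
        g.z += w * dz;
    }
    return g;
}
```

For a single particle with s = 1 and bias = 0.5, the surface sits at distance ln 2 from the centre, which gives a quick sanity check when debugging.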

Thursday, February 24, 2011

Spatial Hashing and Blobbiness(!)

So though I have not yet gotten to add any real user interaction to the program, I'm pretty pleased with my progress for the week.

It turns out the Heckbert particle data structure was doing too much to be worth using for this part of the project, since I only need a simple spatial data structure with a constant radius of interaction. I coded up a very basic spatial hash grid. Right now, the domain is finite for simplicity, but I think if I choose a good hash function I should be able to essentially only create buckets where there are particles in the bucket. For now though, the grid is large enough to cover any animations I would want to simulate and fine enough to save on search time. The data structure is able to find all particles within the radius of interaction (which is also the grid cell size) by only searching within a particle's own bucket and the neighboring 26 buckets instead of searching the entire space. I wrote a test program to make sure the spatial hashing grid is able to correctly find a particle's neighbors. Every time step, the grid is rebuilt by looping through the particles and updating their buckets in O(n). Here is a video of the test program running:
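To make the idea concrete, here is a minimal sketch of a spatial hash grid along the lines described above. It is not the project's actual code: the class name, the `std::unordered_map` storage (which only creates buckets for occupied cells, rather than a finite preallocated grid), and the bit-packed cell key are all assumptions made for this example.

```cpp
#include <cmath>
#include <cstdint>
#include <unordered_map>
#include <vector>

struct Vec3 { double x, y, z; };

class SpatialHashGrid {
public:
    // cellSize doubles as the radius of interaction.
    explicit SpatialHashGrid(double cellSize) : h_(cellSize) {}

    // Rebuild every bucket from scratch: O(n) in the number of particles.
    void rebuild(const std::vector<Vec3>& pts) {
        buckets_.clear();
        for (int i = 0; i < static_cast<int>(pts.size()); ++i)
            buckets_[key(cell(pts[i].x), cell(pts[i].y), cell(pts[i].z))].push_back(i);
    }

    // Indices of all particles within h_ of particle i (excluding i),
    // found by scanning its own cell plus the 26 surrounding cells.
    std::vector<int> neighbors(const std::vector<Vec3>& pts, int i) const {
        std::vector<int> out;
        const Vec3& p = pts[i];
        const std::int64_t cx = cell(p.x), cy = cell(p.y), cz = cell(p.z);
        for (int dx = -1; dx <= 1; ++dx)
        for (int dy = -1; dy <= 1; ++dy)
        for (int dz = -1; dz <= 1; ++dz) {
            auto it = buckets_.find(key(cx + dx, cy + dy, cz + dz));
            if (it == buckets_.end()) continue;
            for (int j : it->second) {
                if (j == i) continue;
                const double ex = pts[j].x - p.x;
                const double ey = pts[j].y - p.y;
                const double ez = pts[j].z - p.z;
                // The distance test also filters out any hash collisions.
                if (ex * ex + ey * ey + ez * ez <= h_ * h_) out.push_back(j);
            }
        }
        return out;
    }

private:
    std::int64_t cell(double v) const {
        return static_cast<std::int64_t>(std::floor(v / h_));
    }
    // Pack three cell coordinates into one 64-bit key, 21 bits each.
    static std::uint64_t key(std::int64_t x, std::int64_t y, std::int64_t z) {
        const std::uint64_t mask = (1ULL << 21) - 1;
        return ((static_cast<std::uint64_t>(x) & mask) << 42) |
               ((static_cast<std::uint64_t>(y) & mask) << 21) |
                (static_cast<std::uint64_t>(z) & mask);
    }

    double h_;
    std::unordered_map<std::uint64_t, std::vector<int>> buckets_;
};
```

Typical use would be to call `rebuild(positions)` once per time step and then `neighbors(positions, i)` for each particle i during the density and spring passes.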

The selected particle is in blue (I am using the arrow keys to toggle the selection) and its neighbors are colored red. I added some random particle movement to test that the regridding is working correctly. The boundaries of the grid are also shown.

I have implemented Lagrangian viscoelastic fluid dynamics in a method very similar to the Clavet et al. paper. The springs were tricky to get stable, and required some tweaking. Here is an entertaining video of the entire thing blowing up while debugging the springs.

Even with the speedup from the spatial data structure, I am still having difficulty getting it to run at real time for 1000 particles. Running at 100 particles though, I am still able to get enough resolution to see droplets forming. And best of all, I was able to get some blobby surface tension effects by tweaking the rest pressure parameter. The parameters could still use some more tweaking, e.g. probably way too much elasticity and viscosity right now, but I'm pretty happy with my progress. Anyway, here are some videos of my blobby particles:

For next week, I am planning on trying to speed up the framerate, starting to add in user interaction, and working on getting the implicit formulation working. It might be worth trying a simple marching cubes method just to see how fast it is, e.g. using this open source implementation Joe sent me: http://paulbourke.net/geometry/polygonise/

Alpha reviews coming up... hope it goes okay

Thursday, February 17, 2011

Unstable Particles = Not Quite Liquid

So after setting up the particle system last week, this week I attempted to start getting it to behave as a fluid. Mostly, I have been closely following the Clavet paper, which is essentially simplified SPH with viscoelastic effects, but the fact that we are covering fluid sim in Physically Based Animation definitely helps.

Right now, however, I have hit up against some problems. Although at least there are visible particles and they are moving, I am running into some weird errors, such as the particles flying off of the plane, so I have had to rein back some of the effects such as viscosity, etc. until I can figure out what is going on. I am also currently using a simple array list (C++ std::vector) as the particle data structure, which makes the spring effects intractable in real time, so I am now trying to switch everything over to using the Heckbert spatial hashing grid data structure that I added to my framework.

Anyway, here is the video of the unstable particles. If you squint your eyes, I guess it sort of looks like a liquid...

Although I had hoped to start on the implicit representation soon, my current plan for next week (in addition to preparing for the alpha review) is as follows:

- Switch over to using a simple spatial hashing grid (or Heckbert's fast repulsion grid) instead of std::vector

- Get some sort of reasonable looking stable fluid with at least some of the effects I mentioned last week working.

- As the main idea is for the simulation to be an interactive liquid, get some sort of rough user interaction working (e.g. clicking applies a force vector).

- More background reading on high surface tension

Thursday, February 3, 2011

Physical properties and data structures

Well, this week wasn't nearly as productive as I had hoped, but I have managed to set up a basic particle system data structure and am now incorporating the code from Heckbert's report "Fast Surface Particle Repulsion." Basically it's the framework for a 3D spatial bucket-list style grid that allows for fast access to neighboring particles and will be useful both for layering the fluid simulation effects onto the particle system and later for quickly constraining floater particles to the implicit surface formulation.

In the process of setting up my particle data structure, I have been researching which physical properties of mercury I can put actual numbers to. So far, the most relevant properties of mercury I have been able to quantify:

Behavioral Properties

- Surface tension

- Viscosity

- Elasticity/Plasticity

- Density

- Reflectivity

- Index of Refraction

Surface Tension

Surface tension is the property exhibited by liquids that allows them to resist external forces due to the cohesive forces among liquid molecules which, while netting to zero within the liquid, result in a net inward force at the boundary. It turns out that surface tension is also responsible for the "blobby" feel of mercury as well as its tendency to form droplets or puddles instead of spreading all the way out (combined with mercury's lack of adherence to many solids), since the droplets are pulled into a spherical shape by the cohesive forces at the liquid surface. Note the blobby behavior of the high surface tension liquids in this movie (thanks to Joe for finding this one). Mercury's surface tension is extremely high: about 487 dyn/cm at 15 degrees Celsius, compared to water's surface tension of 71.97 dyn/cm at 25 degrees Celsius.

In order to simulate such a high surface tension, it's looking likely I might have to rely on more than the double density relaxation algorithm described in Particle-based viscoelastic fluid simulation where some amount of surface tension and smoothed surface is ensured but the amount is not directly controllable. I'm hoping I'll be able to fake the increased surface tension by applying an additional cohesive force to boundary particles, but this may result in asymmetry or instability, in which case I will need to think of a different approach.
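For reference, here is a rough sketch of one double density relaxation pass as I understand it from the Clavet et al. paper. It uses a brute-force O(n^2) neighbor loop for brevity rather than a spatial grid, and the function name and parameter names are my own choices for this example. Each particle computes a density and a near-density from its neighbors, turns them into a pressure and a near-pressure, and then pushes itself and each neighbor apart (or together) by equal and opposite displacements.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { double x, y, z; };

// One relaxation pass over all particle positions.
// h: interaction radius, k / kNear: pressure constants,
// rho0: rest density, dt: time step.
void doubleDensityRelaxation(std::vector<Vec3>& pos, double h, double k,
                             double kNear, double rho0, double dt) {
    const std::size_t n = pos.size();
    for (std::size_t i = 0; i < n; ++i) {
        // Pass 1: density and near-density from neighbors within h.
        double rho = 0.0, rhoNear = 0.0;
        for (std::size_t j = 0; j < n; ++j) {
            if (j == i) continue;
            const double dx = pos[j].x - pos[i].x;
            const double dy = pos[j].y - pos[i].y;
            const double dz = pos[j].z - pos[i].z;
            const double q = std::sqrt(dx * dx + dy * dy + dz * dz) / h;
            if (q < 1.0) {
                rho     += (1.0 - q) * (1.0 - q);
                rhoNear += (1.0 - q) * (1.0 - q) * (1.0 - q);
            }
        }
        const double P     = k * (rho - rho0);   // can be negative (attraction)
        const double PNear = kNear * rhoNear;    // always repulsive

        // Pass 2: apply symmetric displacements along each pair direction.
        Vec3 di = {0.0, 0.0, 0.0};
        for (std::size_t j = 0; j < n; ++j) {
            if (j == i) continue;
            const double dx = pos[j].x - pos[i].x;
            const double dy = pos[j].y - pos[i].y;
            const double dz = pos[j].z - pos[i].z;
            const double r = std::sqrt(dx * dx + dy * dy + dz * dz);
            if (r <= 0.0 || r >= h) continue;    // skip coincident or far particles
            const double q = r / h;
            const double mag = dt * dt * (P * (1.0 - q) + PNear * (1.0 - q) * (1.0 - q));
            const double half = 0.5 * mag / r;   // half displacement per unit of (dx,dy,dz)
            pos[j].x += dx * half; pos[j].y += dy * half; pos[j].z += dz * half;
            di.x -= dx * half;     di.y -= dy * half;     di.z -= dz * half;
        }
        pos[i].x += di.x; pos[i].y += di.y; pos[i].z += di.z;
    }
}
```

Because the only handles on cohesion here are k, kNear, and rho0, the strength of the resulting surface tension is an indirect side effect, which is why an extra cohesive force on boundary particles may be needed.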

Viscosity

Mercury is slightly more viscous than water, but much less so than honey, for example.

While the actual viscosity of mercury is 1.526 mPa*s (compared to 0.894 for water and 2,000-10,000 for honey), I think that to make the haptic interaction more interesting I will want the user to be able to vary this by making it more viscous.

Elasticity/Plasticity

Elasticity is the property that brings a material back to its original shape after a deformation is applied and then removed. Within the elastic range, there is a linear relationship between the force applied (stress) and the relative deformation (strain). Above the elastic limit for the material, the relationship becomes nonlinear and the material is said to exhibit plasticity, meaning the substance "forgets" its past shape. The elastic modulus is the measure of an object's tendency to undergo an elastic, non-permanent deformation when a force is applied to it and is defined as stress/strain. The bulk modulus of a substance is a measure of its resistance to compression, or its incompressibility. Mercury has a very high bulk modulus (extremely incompressible) of 28.5 * 10^9 Pa, compared to water's bulk modulus of 2.15 * 10^9 Pa.

Like viscosity, elasticity/plasticity are part of the viscoelastic fluid formulation and can thus be worked into the system as variables that the users can change.

Density

Mercury has a density of 13,546 kg/m^3 at standard temperature and pressure, compared to water's density of 1,000 kg/m^3 and gold's 19,320 kg/m^3. The density might be useful in computing an appropriate force feedback.

Reflectivity

Mercury's reflectivity is relatively high at 73%. I hope to simulate the reflectivity using basic environment mapping.

Index of Refraction

Mercury has a low index of refraction at 1.000933 (compared to water, which is 1.33), which will result in essentially no refraction in a vacuum. However, if there is time, I hope to parametrize this property as well, so there would have to be some perturbation of the environment map based on the amount of refraction.

Some useful online sources I have found that give various physical properties of mercury.

http://environmentalchemistry.com/yogi/periodic/Hg.html

http://www.engineeringtoolbox.com/bulk-modulus-elasticity-d_585.html

http://en.wikipedia.org/wiki/Viscosity

http://en.wikipedia.org/wiki/Plasticity_%28physics%29

http://en.wikipedia.org/wiki/Elastic_modulus

http://en.wikipedia.org/wiki/Surface_tension

Also, this paper discusses the reflectivity of Mercury

Basic GUI

So, after a nice debilitating bout of the stomach flu that seems to have been going around, I finally finished the basic GUI design, more or less as I had planned it out (see diagram from last post).

I made extensive use of the Qt library in constructing my GUI (and yes Joe, it is much much nicer than MFC so thanks for the save on that one). Taking Yiyi's advice into consideration, I added some shortcuts to hide the sidebar and toolbars so that the main openGL window can scale up.

I also implemented a very simple Maya-style camera with buttons to "pan", "tumble", and "scale" (please excuse my terrible sense of humor with the icon images. And yes, it's supposed to be a tumbler). There are also keyboard shortcuts that do the same things. Rob Bateman's site was an extremely helpful resource for setting the camera up.

There's obviously still a lot left to be desired in the design, such as more parameters in the side toolbox, a floating axis in the corner of the openGL window and perhaps more interaction tools, but this should give me a framework to work from for the time being. Meanwhile, I have also been setting up the as of now invisible particle system framework, which should hopefully become visible in the near future. I've also been reading and re-reading some papers on viscoelastic fluids. Right now, this one is looking to be the closest to what I am going to attempt to implement.

Anyway, here are some screenshots of my GUI and a video capture of my using the camera. More to come soon!

Thursday, January 20, 2011

GUI Design

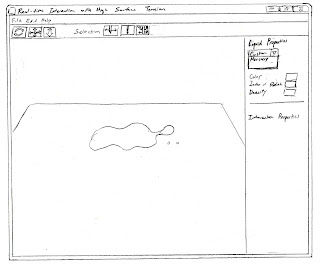

Here's an early sketch of the plan for the GUI. Note I have left lots of extra room to allow for more features, time permitting.

Mercury Reference Videos

Some cool videos I found with people playing with mercury. Note its high surface tension, blobbiness, high reflectivity, and tendency to break into small spherical droplets. The mercury also appears to leave very little residue and to be of higher viscosity than water:

Playing with Mercury

In my project, I'll be exploring how I can use the computer to interact with and represent a mercury-like fluid in real time, an example of a material that often cannot be interacted with in a non-virtual environment, either because of safety concerns (as is the case with mercury) or because the material simply doesn’t exist. The project will take advantage of recent speedups in processing power to allow for real-time rendering of mercury and similar viscous fluids using an implicit surface representation and fluid dynamics. Specifically, I would like to simulate the “blob-like” nature of mercury as well as other physical properties such as high surface tension. The ultimate goal of this project is to then interface with a haptic device (the Phantom Omni, to be specific) to provide the user with something that will hopefully look and feel like real mercury, or at least like something novel.

So far I've mainly been reading up on various literature and working on setting up the basic MFC GUI framework (more on this coming soon). As far as I can tell, the basic game plan is as follows:

Implicit Surface Formulation

- Use a relatively small number of particles to create a particle-based viscoelastic fluid simulation, tweaking the parameters (e.g. no plasticity, no stickiness) till I get something that looks reasonably like mercury - or at least something that appears to have high surface tension

- Use the particles to get an implicit (metaball) formulation of the surface - e.g. the sum of spherical gaussian functions, the center of each located at the center of the particles.

- Now, to render the implicit formulation, constrain "floater" particles to the implicit surface and use repulsion and fission to adaptively get a uniform distribution.

- Polygonize these points into a triangle mesh.

- Render the mesh in real time with OpenGL using smooth shading and environmental mapping for the reflection.

- Allow for interaction with the liquid, e.g. mouse strokes exert an external force on the particles

- Interface with the Omni haptic device to allow for applying forces and force feedback

So that's the basic plan. As you can see, there is quite a bit to do, barring any unforeseen issues. However, hopefully by sticking to a tight schedule, I will be able to accomplish most (if not all) of these goals on time.
